I filmed a short walkthrough showing how I use Browse AI, and in this article I’ll expand on everything I covered in that video — step by step, with real examples, screenshots, and practical tips. If you’re trying to scrape data from websites without writing any code, automate repetitive data collection tasks, or build monitoring pipelines that push updates into spreadsheets or automations, Browse AI is one of the fastest ways to get started.

In the video, I demoed setting up a pre-built robot against an AI directory, walked through the dashboard, and explained how monitoring, API access, and integrations like Zapier and Make (Integromat) let you take extracted data and plug it into your workflows. I also covered pricing tiers and when Browse AI makes sense for teams versus individuals. I’ll repeat key moments from that demo here, expand on them, and provide actionable guidance so you can set up your first robot in under five minutes.

Table of Contents

- Why Browse AI?

- What Browse AI Can Extract

- Step-by-step: Create Your First Robot

- Monitoring and Scheduling

- Integrations & the API

- Pricing Explained

- Use Cases & Practical Examples

- Best Practices

- Troubleshooting: Common Issues and Fixes

- Security, Privacy & Ethical Considerations

- FAQ

- Advanced Tips & Workflow Ideas

- When to Choose Browse AI vs. Building a Custom Scraper

- Final Recommendations

- Summary

- FAQ (Extended)

- Closing Thoughts

Why Browse AI?

I’ve tested a bunch of scraping and automation tools, and Browse AI stands out for a few key reasons:

- No-code scraping: You don’t need to write scripts or manage proxies. You point the robot at a page, select data elements, and it builds the extraction logic for you.

- Pre-built robots: Browse AI supplies templates for many common targets (Amazon, Etsy, real estate sites, job boards, and more). If you want to extract known page types, these templates often get you live data within minutes.

- Monitoring & scheduling: Besides single extractions, you can monitor pages for changes and run robots on schedules.

- API & integrations: You can generate an API key, and there are built-in connectors for Zapier and Make, so you can plug scraped data into downstream automations (Google Sheets, Slack, social media tools, CRMs, etc.).

- Scalability: The credit-based model and the ability to add multiple domains make it suitable for teams that need ongoing extraction work at scale.

In short: If you want to extract or monitor data quickly with minimal setup and tie it into automations, Browse AI is a very pragmatic pick.

What Browse AI Can Extract

One of the first things I show when introducing Browse AI is the breadth of pre-built robots. These aren’t just generic scrapers — Browse AI offers templates tuned for specific site patterns. Here’s a non-exhaustive list of things you can grab out of the box:

- E-commerce: Amazon search results, product pages, prices, reviews.

- Marketplaces: Etsy listings and reviews, ticketing sites like Eventbrite, Craigslist postings.

- Real estate & rentals: Sites like LoopNet, Zillow, and Airbnb (listings, prices, availability).

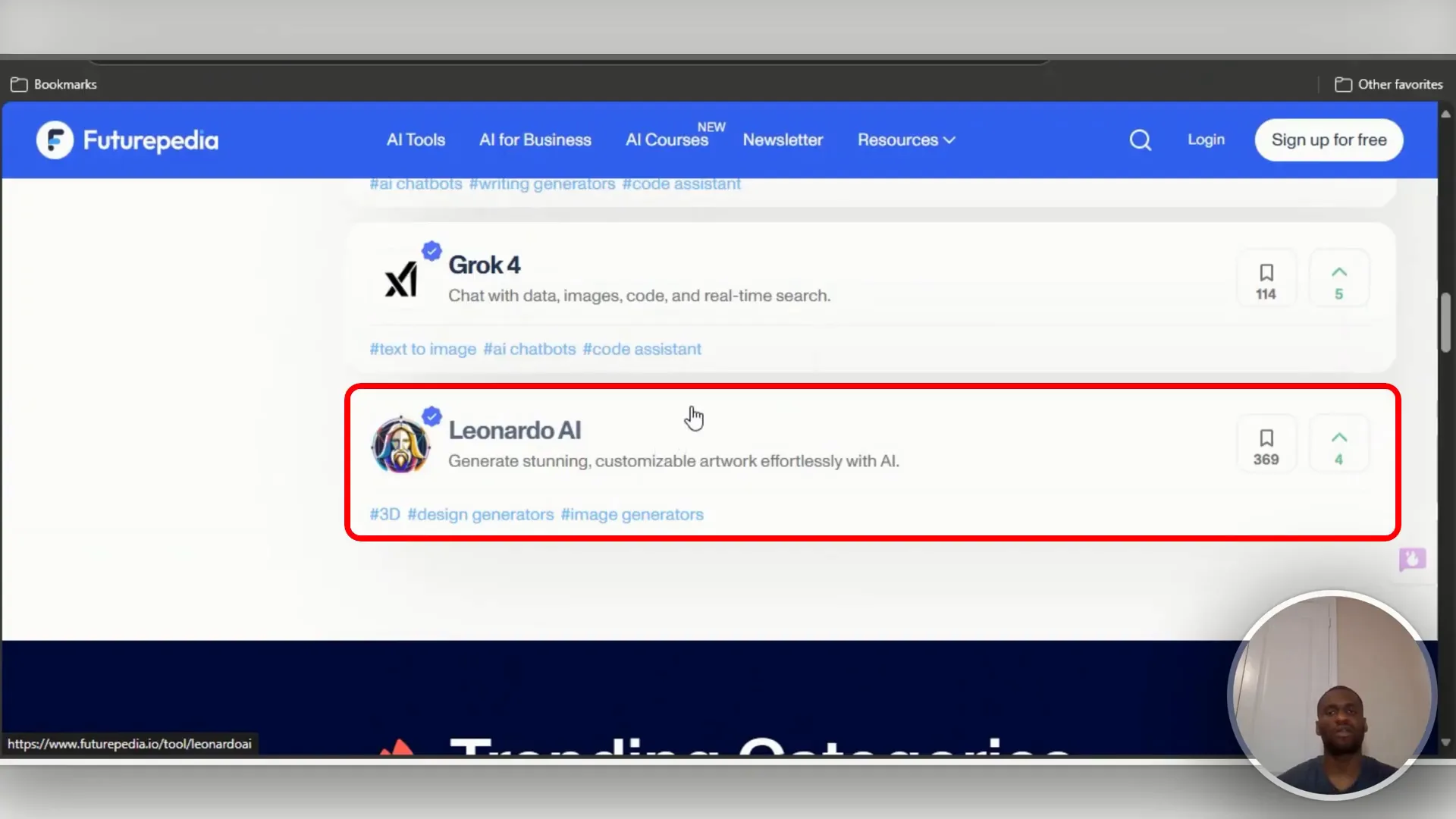

- Directories & AI tools: AI directories such as FuturePedia (tool name, categories, pricing, homepage, score).

- Job boards & freelancing: Candidate lists, job posts, profiles.

- Social & video platforms: TikTok, YouTube (search results and metadata), LinkedIn lists (review LinkedIn’s terms first), etc.

- Search engines & news: Google search results, Bing, Google News, Hacker News, Substack feeds.

- Tech marketplaces: Google Workspace Marketplace, Zapier app directories, and listings on other tech catalogs.

The pre-built robots save a ton of time, because the creator has already identified the DOM elements and the typical layout. You can still customize anything they produce, which is useful when websites change or when you need additional fields.

Step-by-step: Create Your First Robot

Below I’ll walk you through creating a simple robot that extracts the key details of a tool entry from an AI directory. This is the exact pattern I demoed — I used FuturePedia as an example and extracted a tool’s name, categories, pricing, and overall score.

Step 1 — Sign up and log in

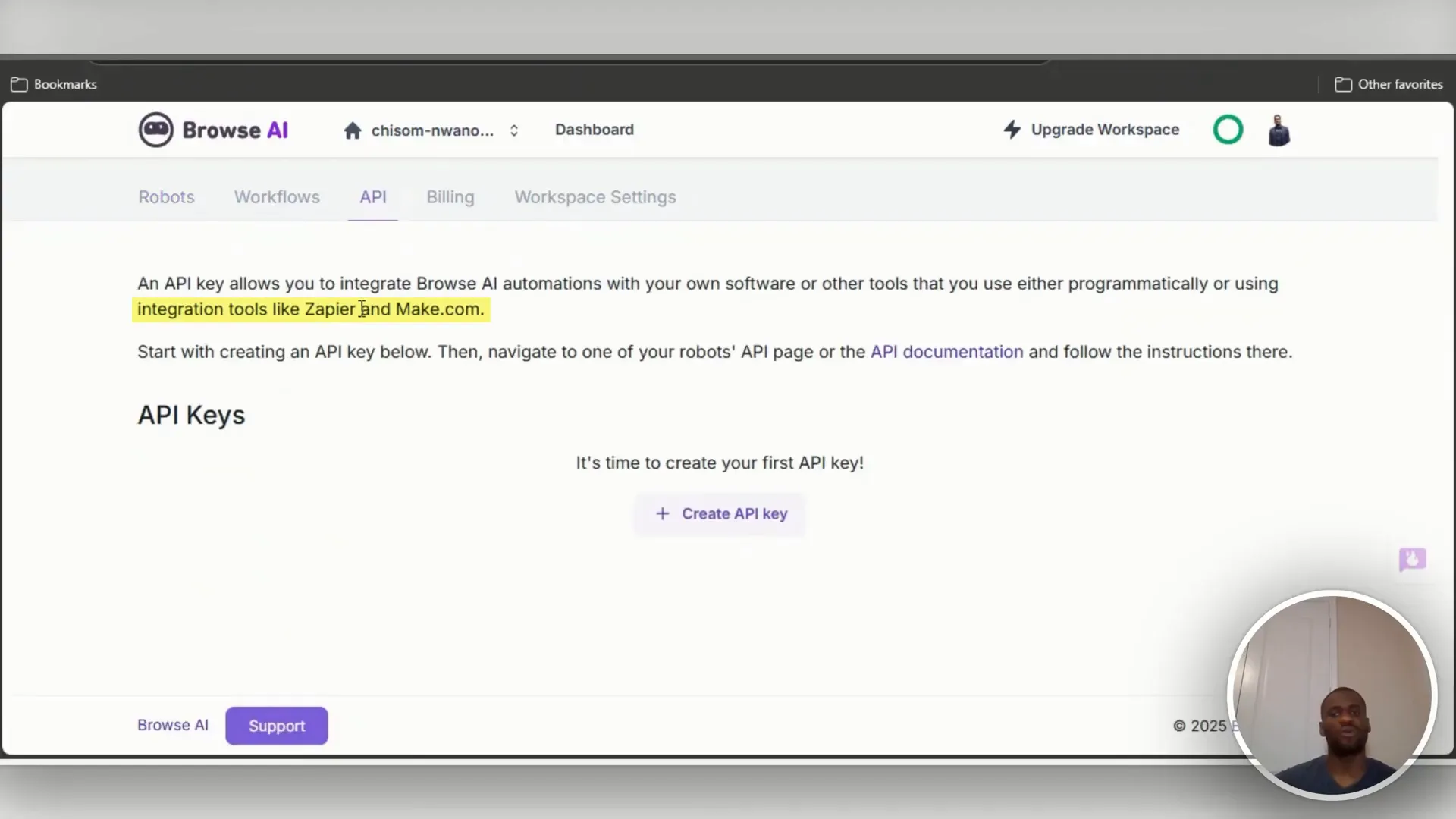

Start at the Browse AI website and either sign up with Google or create an account with your email. If you plan to integrate with Zapier or Make, set up those accounts now as well; you’ll connect them to Browse AI with an API key later.

Step 2 — Choose a pre-built robot or create new

From the dashboard, Browse AI shows a library of pre-built robots. You can search by site or by use case (Amazon, Zillow, FuturePedia, etc.). I picked the “AI detail page” template for FuturePedia as a quick example:

- Click the template for the site you want.

- Click “Check details” (or similar) to preview the fields the robot will extract.

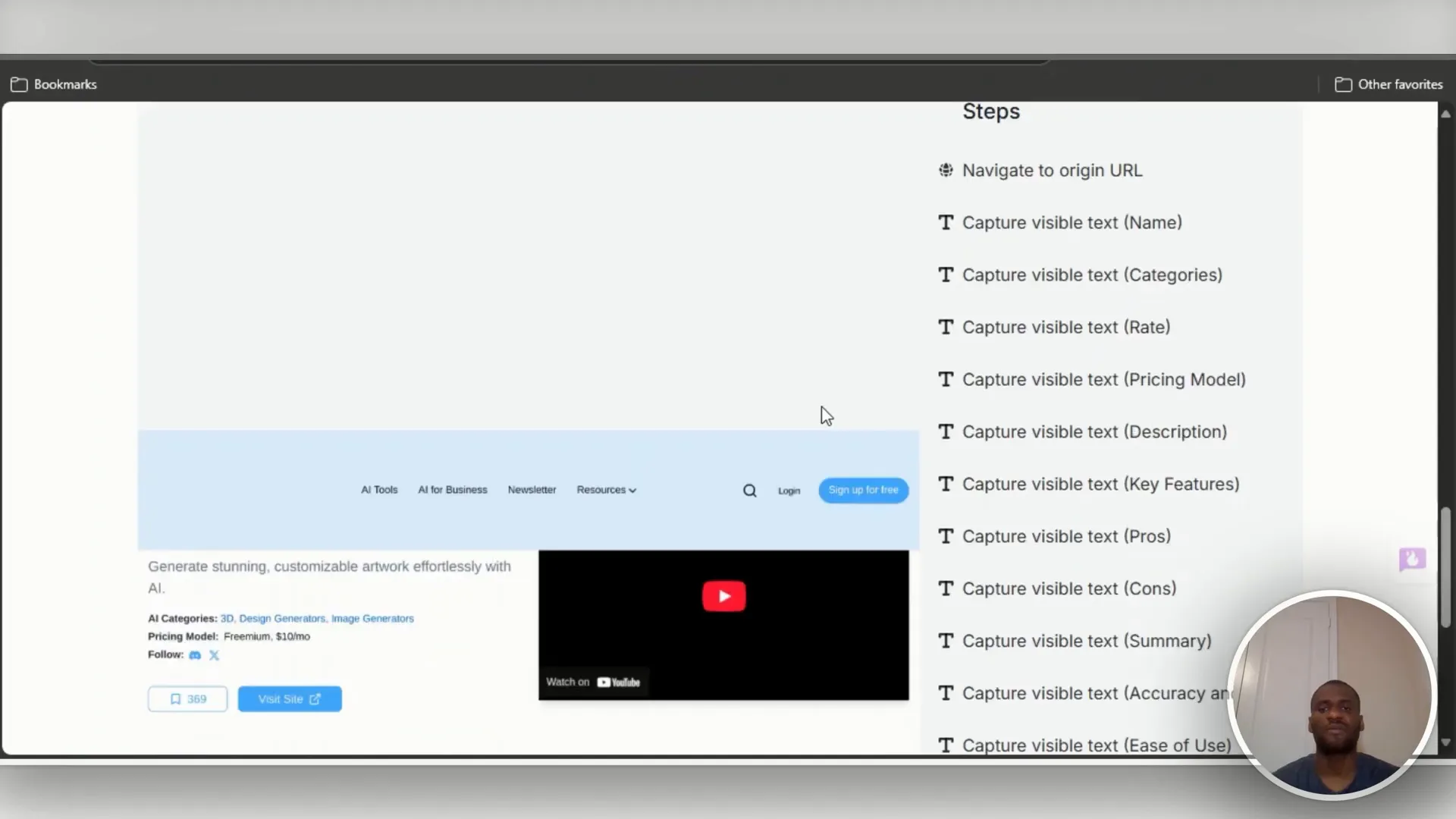

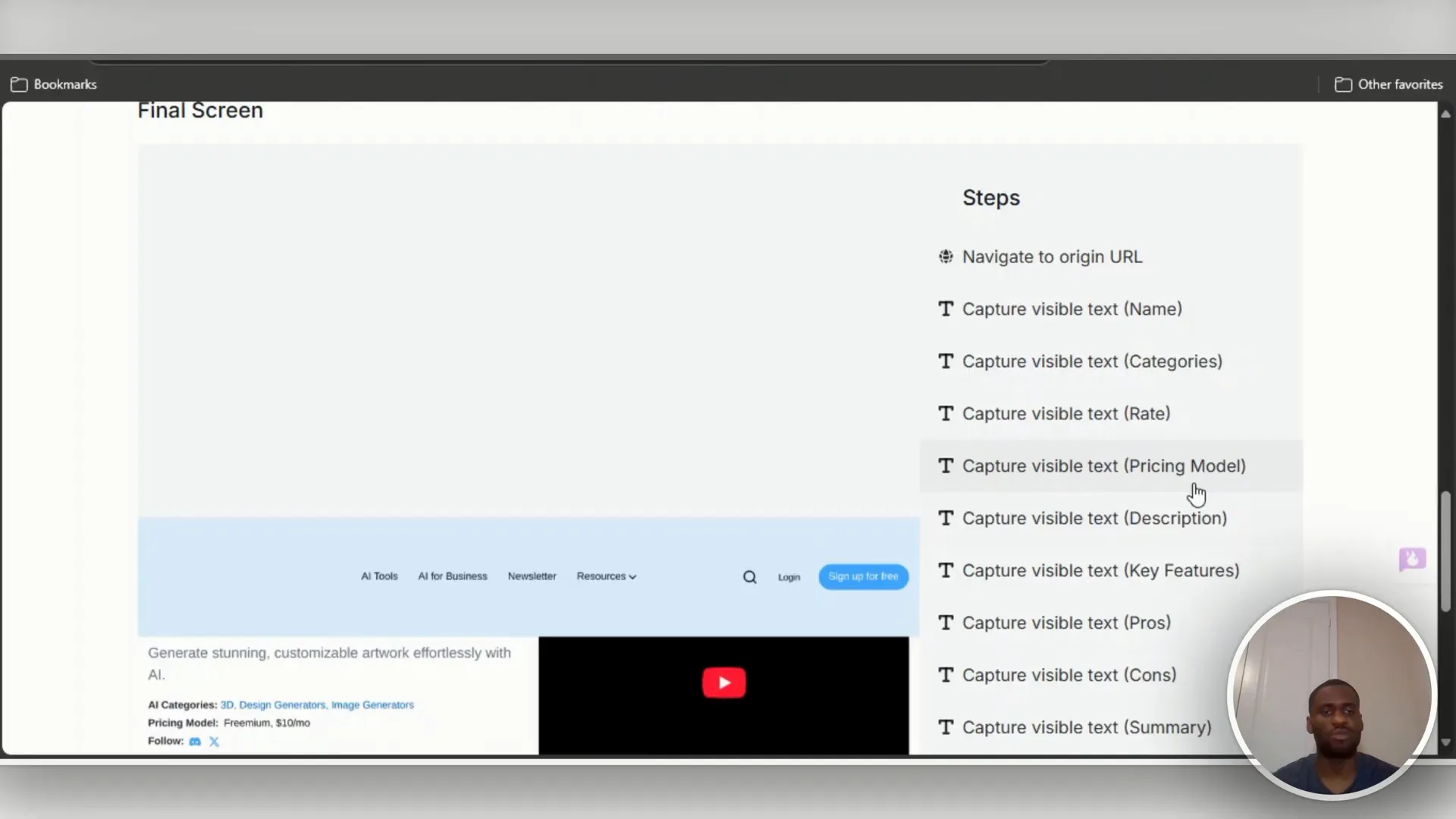

Step 3 — Point the robot to a real URL

Open the target site (for example, futurepedia.io or whichever AI directory you’re scraping) and copy the URL of an example tool page. When you finish configuring the robot in Browse AI, the platform navigates to that URL and captures the visible text elements, categories, pricing, and any screenshots you’ve asked for.

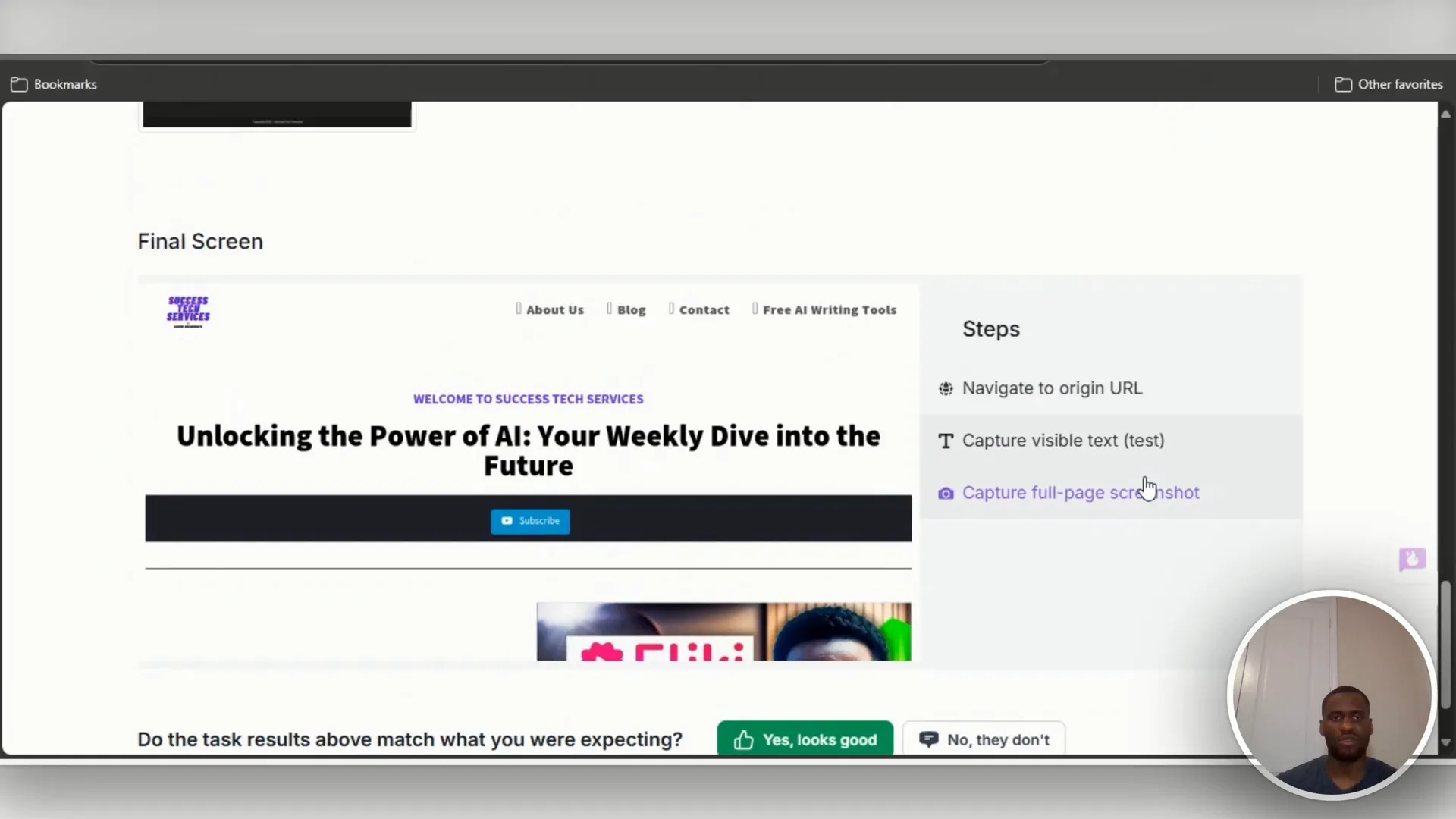

Browse AI’s recorder shows you steps like:

- Navigate to original URL

- Capture visible text (name, description)

- Capture categories

- Capture pricing model

- Take full page screenshot

These are discrete actions you can edit, reorder, or add to if you need more fields.

Step 4 — Finish and run

Click “Finish,” then run the robot once to validate that everything extracted correctly. The robot will return a structured result containing the fields you selected. In my run, I got the tool name, categories, ratings, pricing model, overview, and an “overall score” field from the directory page.

Tip: Always run your robot once manually before scheduling it. That initial run helps you catch missing attributes or ambiguous selectors (multiple elements matching the same selector).
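If you later pull results into a script through the API, you can automate that sanity check. This is a minimal sketch, assuming the robot’s captured fields have already been parsed into a plain Python dict; the field names in `REQUIRED_FIELDS` are hypothetical and modeled on the FuturePedia example.

```python
from __future__ import annotations

# Hypothetical field names, modeled on the FuturePedia robot from the demo.
REQUIRED_FIELDS = ["name", "categories", "pricing_model", "overall_score"]


def missing_fields(result: dict[str, str | None], required: list[str]) -> list[str]:
    """Return the required fields that are absent or blank in one extraction."""
    missing = []
    for field in required:
        value = result.get(field)
        # None, empty strings, and whitespace-only strings all count as missing.
        if value is None or not str(value).strip():
            missing.append(field)
    return missing


sample = {"name": "Example Tool", "categories": "Writing", "pricing_model": " "}
print(missing_fields(sample, REQUIRED_FIELDS))  # ['pricing_model', 'overall_score']
```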

Monitoring and Scheduling

One of Browse AI’s most powerful features is monitoring. After you’ve extracted data, you can tell Browse AI to keep checking the page on a schedule — hourly, daily, weekly — and it will run the robot automatically and store changes.

Why monitoring matters:

- Price & availability watches: Track price drops on Amazon or product restocks.

- Competitor changes: See when competitors change product descriptions, offerings, or published content.

- Content updates: Monitor article updates, author changes, or published dates for content republishing automations.

When the monitor detects a change, you can have Browse AI send the new data to your integrations (Zapier, Make, or directly into your database via the API) or generate alerts via email, Slack, or webhook callbacks.
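Browse AI handles the comparison itself, but if you re-check changes downstream (for example, before sending an alert), the core idea is a field-by-field diff of two snapshots. The sketch below is my own illustration, not Browse AI’s internal algorithm; the field names are hypothetical, and the `ignore` set shows how excluding volatile fields such as a last-checked timestamp keeps alerts quiet.

```python
from __future__ import annotations


def diff_snapshots(
    previous: dict[str, str],
    current: dict[str, str],
    ignore: frozenset[str] = frozenset(),
) -> dict[str, tuple[str | None, str | None]]:
    """Map each changed field to its (old, new) value, skipping ignored fields."""
    changes: dict[str, tuple[str | None, str | None]] = {}
    # Union of keys, so fields that appear or disappear are reported too.
    for field in sorted(previous.keys() | current.keys()):
        if field in ignore:
            continue
        old, new = previous.get(field), current.get(field)
        if old != new:
            changes[field] = (old, new)
    return changes


before = {"price": "$49", "stock": "In stock", "checked_at": "09:00"}
after = {"price": "$39", "stock": "In stock", "checked_at": "10:00"}
# Ignoring the volatile timestamp leaves only the meaningful change.
print(diff_snapshots(before, after, ignore=frozenset({"checked_at"})))  # {'price': ('$49', '$39')}
```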

Integrations & the API

Browse AI supports direct integration paths that make it a key component in automated pipelines. I always configure the API key and then decide how to use the data:

- Zapier: Create a Zap that triggers on a Browse AI extraction and sends rows into Google Sheets, pushes messages to Slack, or creates leads in a CRM.

- Make (Integromat): Use Make to build multi-step transformations, call other APIs, or normalize data and write to Airtable or databases.

- Direct API: Grab the Browse AI API key and call the API from your own backend to fetch extraction results programmatically.
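For the direct API route, here’s a minimal sketch using only Python’s standard library. The base URL, the `/robots/{robot_id}/tasks/{task_id}` path, and the Bearer-token header are my assumptions about Browse AI’s REST API, so confirm the exact endpoints and response shape in the official API docs before relying on them.

```python
from __future__ import annotations

import json
import urllib.request

# Assumed base URL for Browse AI's REST API; verify against the official docs.
API_BASE = "https://api.browse.ai/v2"


def build_task_request(robot_id: str, task_id: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for one task (run) of a robot. Path layout is assumed."""
    url = f"{API_BASE}/robots/{robot_id}/tasks/{task_id}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}"},  # assumed auth scheme
        method="GET",
    )


def fetch_task(robot_id: str, task_id: str, api_key: str) -> dict:
    """Send the request and decode the JSON response body."""
    req = build_task_request(robot_id, task_id, api_key)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

To use it, call `fetch_task()` with a robot ID and task ID from your dashboard, and load the API key from an environment variable or secrets manager rather than hard-coding it. Keeping request construction separate from the network call makes the request easy to inspect and test.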

Practical pipelines I often build:

- Monitor a competitor product page for price changes → When price drops, send Slack alert + create a Google Sheet row with the change and timestamp.

- Scrape newly published blog posts from a niche site → Extract title, author, summary → Auto-post summaries to a team Slack channel and a social scheduler.

- Pull real estate listings from LoopNet → Normalize property attributes (bedrooms, sqft, price) → Feed an analytics dashboard for market trends.

Pricing Explained

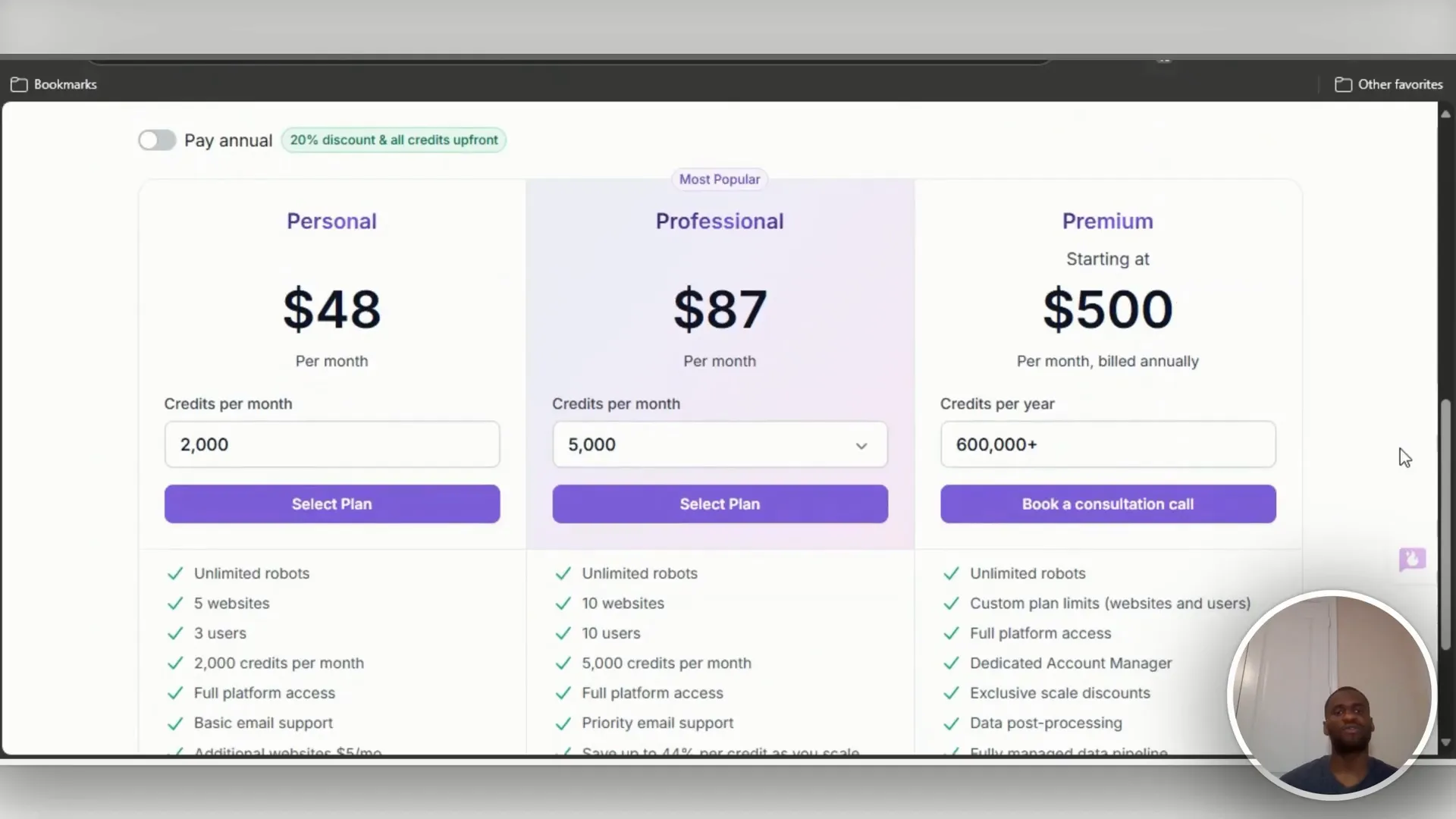

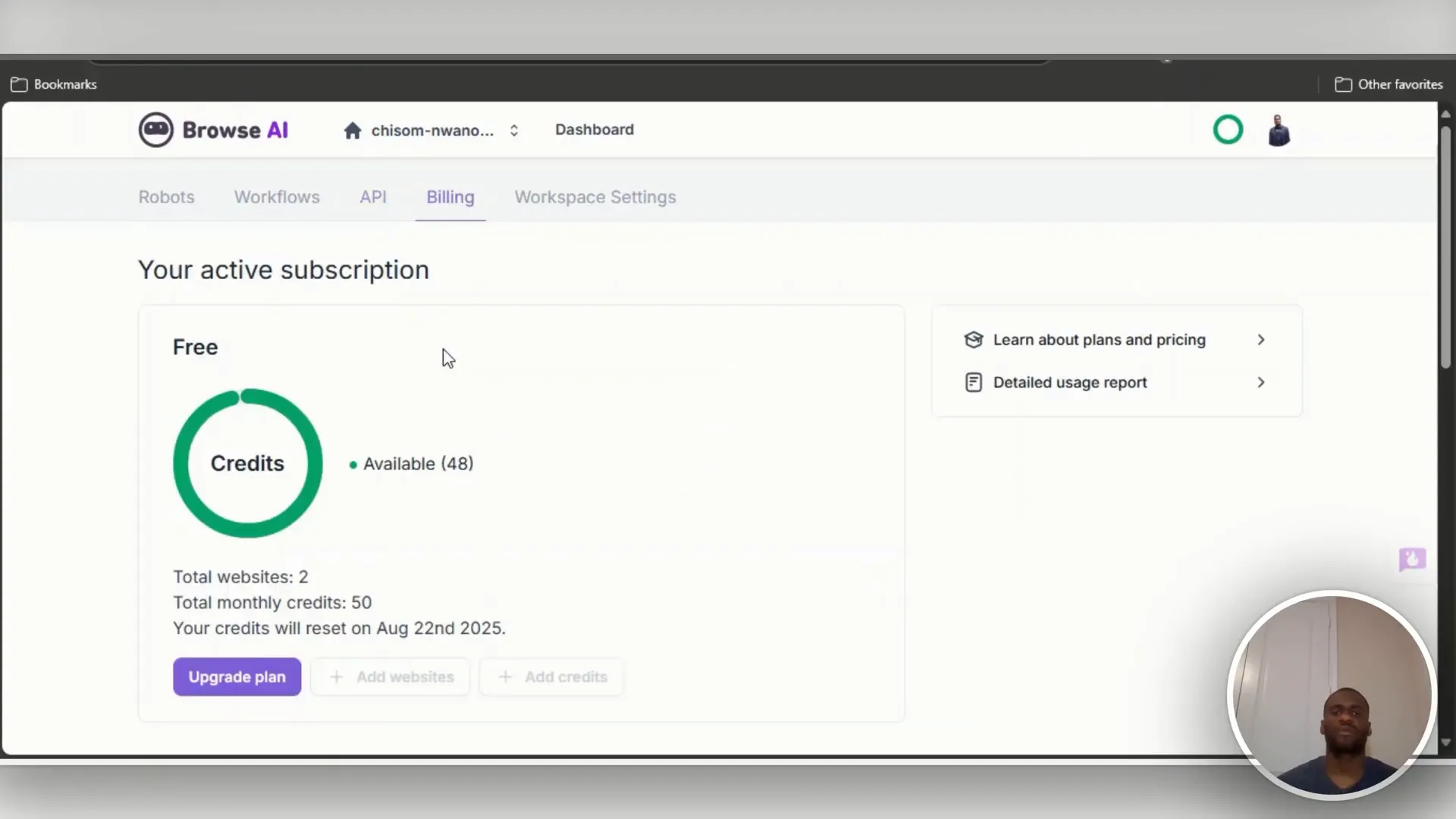

Browse AI uses a credit-based model linked to plans with different limits on domains, users, and credits. Here’s how the typical tiers break down and what that means in practice:

- Starter/Basic: Good for individuals testing the platform. Lower credits (e.g., 50 credits in my personal test), limited websites and users. Ideal to validate robots and run occasional one-offs.

- Pro (example): Around 2,000 credits/month, support for 5 root domains and 3 users, full platform access, unlimited robots. You can add additional websites for a small monthly fee (e.g., $5 per domain).

- Professional: Higher credit allotment (e.g., 5,000 credits/month), more domains (10 sites), and lower incremental costs for additional websites (e.g., $3/month per extra domain).

- Premium or Enterprise: Designed for large-scale scraping needs: very large credit pools (600,000 credits/year in some premium packages), priority support, and broader enterprise features.

How to pick the right plan:

- Estimate how many pages you’ll scrape and how frequently.

- One extraction run uses credits proportional to the complexity and the number of pages crawled; monitor runs increase monthly usage.

- If you’re running monitors on many pages hourly, you’ll need a mid-to-high tier. For occasional research, a starter or pro plan may be sufficient.
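A back-of-the-envelope estimate makes this concrete. The sketch below assumes one credit per page per run, which is a placeholder rather than Browse AI’s actual rate; swap in the figure your dashboard reports after a test week.

```python
def monthly_credits(pages: int, runs_per_day: int, credits_per_run: int = 1, days: int = 30) -> int:
    """Estimate monthly credit usage: pages x runs per day x credits per run x days."""
    return pages * runs_per_day * credits_per_run * days


# Assumption: one credit per page per run. Replace with the figure from your own usage.
hourly = monthly_credits(pages=20, runs_per_day=24)  # 14400
daily = monthly_credits(pages=20, runs_per_day=1)    # 600
print(hourly, daily)
```

Against the 2,000-credit Pro example above, 20 pages checked daily fit comfortably, while hourly checks on the same 20 pages would need more than seven times that allotment.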

Use Cases & Practical Examples

Browse AI is extremely flexible. Below are detailed examples of how I — and teams I work with — use it across verticals.

1. Competitive intelligence

Problem: I need to know when competitor product pages change price, description, or stock levels so our marketing and repricing strategies can adapt.

Solution with Browse AI:

- Create a robot for each competitor product page (or use a template and point it at product lists).

- Set the robot to monitor hourly or daily depending on volatility.

- Send changes via Zapier into Slack + write a row in Google Sheets containing old vs new price, timestamp, and diff.

Benefit: Instant awareness and a historical log for trend analysis.
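Here’s a sketch of the row-building step, assuming the monitor hands you the old and new price strings. The helper names are hypothetical, `parse_price` only handles a single US-style price like `$1,299.00`, and the alert payload uses the `{"text": ...}` shape that Slack incoming webhooks accept; if you route through Zapier’s Slack action instead, you’d only need the message text.

```python
from __future__ import annotations

import re
from datetime import datetime, timezone
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Turn a single scraped price like '$1,299.00' into Decimal('1299.00')."""
    cleaned = re.sub(r"[^\d.]", "", text)  # drop currency symbols and thousands commas
    return Decimal(cleaned)


def price_change_row(product: str, old: str, new: str, when: datetime | None = None) -> dict[str, str]:
    """Build a spreadsheet-ready row: old vs new price, difference, and timestamp."""
    old_p, new_p = parse_price(old), parse_price(new)
    when = when or datetime.now(timezone.utc)
    return {
        "product": product,
        "old_price": str(old_p),
        "new_price": str(new_p),
        "diff": str(new_p - old_p),
        "timestamp": when.isoformat(timespec="seconds"),
    }


def slack_alert(row: dict[str, str]) -> dict[str, str] | None:
    """Return a Slack incoming-webhook payload if the price dropped, else None."""
    if Decimal(row["diff"]) < 0:
        return {"text": f"Price drop on {row['product']}: {row['old_price']} -> {row['new_price']}"}
    return None


row = price_change_row("Example Widget", "$1,399.00", "$1,299.00")
print(row["diff"])  # -100.00
print(slack_alert(row))
```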

2. Market research and lead discovery

Problem: I want to pull listings of companies from a niche marketplace or directory categorizing tools or vendors so I can reach out to potential leads.

Solution with Browse AI:

- Use a pre-built directory robot (like an AI directory or marketplace) to extract company name, description, website, contact link, and category tags.

- Pipe results into Airtable or Google Sheets via Make to enrich data (e.g., run emails through a verifier or call a company API for firmographics).

Benefit: A steady pipeline of leads updated regularly via monitoring and enrichment automations.
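Before the enrichment step, I’d deduplicate leads so the same company isn’t verified or contacted twice. A minimal sketch, assuming each extracted row has a hypothetical `website` field; it keys on the bare domain, so `https://www.acme.com/about` and `acme.com` count as the same company.

```python
from __future__ import annotations

from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Reduce a website URL to a bare lowercase host without a leading 'www.'."""
    if "://" not in url:
        url = "https://" + url  # urlparse needs a scheme to locate the host
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def dedupe_leads(rows: list[dict[str, str]]) -> list[dict[str, str]]:
    """Keep the first row seen for each company domain; rows without a website are skipped."""
    seen: set[str] = set()
    unique = []
    for row in rows:
        key = domain_of(row.get("website", ""))
        if key and key not in seen:
            seen.add(key)
            unique.append(row)
    return unique
```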

3. Real estate market analysis

Problem: I need to analyze price per square foot across neighborhoods and detect listing changes over time.

Solution with Browse AI:

- Scrape listing details from LoopNet, Zillow, or other real estate platforms with robots that pull price, address, sqft, bedrooms, listing date.

- Store results in a database and run periodic analytics (price histograms, time-on-market trends).

Benefit: Accurate, time-bound snapshots enabling price trend visualizations and investment decisions.
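Once listings are stored, the price-per-square-foot analysis is a small grouping step. This sketch assumes the rows have already been normalized into numbers and uses hypothetical field names (`neighborhood`, `price`, `sqft`); it uses the median rather than the mean so one luxury listing doesn’t skew a neighborhood.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import median


def price_per_sqft_by_area(listings: list[dict]) -> dict[str, float]:
    """Median price per square foot for each neighborhood, skipping incomplete rows."""
    per_area: dict[str, list[float]] = defaultdict(list)
    for item in listings:
        price, sqft = item.get("price"), item.get("sqft")
        if not price or not sqft:
            continue  # missing or zero values would distort the ratio
        per_area[item.get("neighborhood", "unknown")].append(price / sqft)
    return {area: round(median(values), 2) for area, values in per_area.items()}


listings = [
    {"neighborhood": "North", "price": 500_000, "sqft": 1_000},
    {"neighborhood": "North", "price": 900_000, "sqft": 1_500},
    {"neighborhood": "South", "price": 300_000, "sqft": 1_200},
    {"neighborhood": "South", "price": None, "sqft": 900},
]
print(price_per_sqft_by_area(listings))  # {'North': 550.0, 'South': 250.0}
```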

4. Content aggregation and republishing

Problem: I want to pull the latest posts from several niche blogs and post short summaries to my newsletter or social channels automatically.


Solution with Browse AI:

- Use robots to extract new post titles, summaries, authors, and links from target websites.

- On detection, push a summary to a Google Sheet, then trigger a Zapier workflow to create a Buffer or Hootsuite post draft or draft newsletter content.

Benefit: A semi-automated content feed you can curate, saving hours of manual research.
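To avoid posting the same article twice when a monitor fires again, keep track of links you’ve already processed. A minimal sketch, assuming each extracted post has a hypothetical `link` field; in a real pipeline you’d persist `seen_links` in a sheet or database rather than in memory.

```python
from __future__ import annotations


def new_posts(extracted: list[dict[str, str]], seen_links: set[str]) -> list[dict[str, str]]:
    """Return posts whose link hasn't been processed yet, and record them as seen."""
    fresh = []
    for post in extracted:
        link = post.get("link", "").strip()
        if link and link not in seen_links:
            seen_links.add(link)  # mutates the caller's set so the next run skips it
            fresh.append(post)
    return fresh
```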

Best Practices

When you’re building robots and deploying monitoring pipelines, I follow these principles to maintain reliability and avoid issues:

Design robots for stability

- Prefer stable selectors: Use IDs or explicit class names when possible. Avoid selecting by text that might change frequently.

- Use relative paths: If the site changes layout, relative siblings or container-based selectors are more resilient.

- Include fallbacks: Capture alternative elements or create conditional checks if a primary selector fails.

Respect site terms and rate limits

- Check the site’s robots.txt and terms to ensure scraping is permitted.

- Avoid running high-frequency monitors against sites that block crawlers or have strict rate limits. Use realistic intervals (e.g., hourly or daily) depending on the site.

- If you need high-frequency monitoring of a site, consider asking the site owner for permission, or use an official API if one is available.
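If you want to check robots.txt programmatically before building a robot, Python’s standard library includes a parser. The sketch parses a robots.txt body passed in as text so it runs offline; in practice you’d call `set_url()` and `read()` to fetch the live file. Keep in mind that robots.txt doesn’t replace reading the site’s terms.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Check whether a URL is permitted by the rules in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


rules = "User-agent: *\nDisallow: /private/\n"
print(allowed(rules, "https://example.com/tools/some-tool"))
```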

Data quality and validation

- Add validation rules: Check that extracted price fields parse as numbers, dates are valid, and URLs resolve.

- Normalize values in downstream automations: Currency symbols, thousand separators, and different date formats should be normalized before analytics.

- Log extraction errors and establish a review process for mis-parsed entries.
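Here’s a sketch of those validation rules, assuming a row whose hypothetical `price`, `date`, and `url` fields have already been normalized. It only checks that the URL is well-formed; confirming that it actually resolves would need a network request.

```python
from __future__ import annotations

from datetime import date
from decimal import Decimal, InvalidOperation
from urllib.parse import urlparse


def validate_row(row: dict[str, str]) -> list[str]:
    """Return human-readable problems found in one normalized extraction row."""
    problems = []
    try:
        Decimal(row.get("price", ""))
    except InvalidOperation:
        problems.append(f"price is not numeric: {row.get('price')!r}")
    try:
        date.fromisoformat(row.get("date", ""))
    except ValueError:
        problems.append(f"date is not ISO 8601 (YYYY-MM-DD): {row.get('date')!r}")
    parts = urlparse(row.get("url", ""))
    if parts.scheme not in ("http", "https") or not parts.netloc:
        problems.append(f"url is not an absolute http(s) URL: {row.get('url')!r}")
    return problems
```

Rows that return a non-empty list can go to the review queue instead of the analytics table.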

Storage & history

- Keep a historical record. The value often comes from how values change over time.

- Store raw extractions alongside normalized values to aid debugging and audits.

Troubleshooting: Common Issues and Fixes

Robots fail sometimes. I’ll walk through common failure modes and how I solve them quickly.

Issue: Robot returns empty fields

- Fix: Re-open the recorder, load the target URL, and re-select the element. The site may use dynamic loading (JavaScript that renders content after the page load). In that case, add a “wait for element” step or increase page load timeout.

Issue: Extraction works intermittently

- Fix: The page layout may be content-dependent (e.g., different structures for video vs. text posts). Create multiple extraction rules or conditional checks for each layout variant.

Issue: Too many changes in monitor results (noise)

- Fix: Filter out non-essential fields from the monitoring comparison (for example, exclude last-checked timestamps or social share counts if you don’t care about them) or run a daily digest instead of hourly checks.

Issue: Pages blocked or CAPTCHA

- Fix: If you encounter frequent CAPTCHAs, start by lowering your run frequency. In many cases you may need to use a site’s official API or negotiate scraping access. Browse AI’s platform handles many common anti-bot strategies, but high-frequency scraping may still trigger protections.

Security, Privacy & Ethical Considerations

With great scraping power comes responsibility. I always emphasize this when setting up any data pipeline:

- Respect privacy: Don’t scrape personal data that violates privacy laws or platform terms. For example, scraping private LinkedIn profiles or harvesting personal emails without consent can get you blocked and may have legal consequences.

- Check copyright and terms of service: Many sites allow scraping for personal research but prohibit redistribution or commercial reuse. Always read the site’s Terms of Service.

- Be transparent with recipients: If you’re using scraped data to contact people (e.g., outreach), ensure your outreach respects anti-spam laws and is ethical.

FAQ

Q: Do I need to code to use Browse AI?

A: No. Browse AI is a no-code web automation tool. You can use pre-built robots or build robots through a point-and-click recorder. Developers can still use the API for advanced use cases, but coding is not required for most common tasks.

Q: Which websites can I scrape with Browse AI?

A: Browse AI supports scraping most public websites. There are pre-built robots for categories like e-commerce, real estate, job boards, directories, social platforms, and more. That said, some sites explicitly disallow scraping in their policies or use technical protections such as CAPTCHAs, so always verify the terms and adjust your run frequency accordingly.

Q: How does monitoring work and how often can I run robots?

A: Monitoring lets you schedule robots to run at set intervals (hourly, daily, etc.) and compare current extraction results with previous runs. The frequency you choose should reflect how often the site changes. Very high-frequency runs (e.g., per minute) are usually unnecessary and might trigger anti-bot defenses.

Q: What are credits and how are they consumed?

A: Browse AI uses credits to measure usage. Each run consumes credits based on the number of pages or actions performed, and scheduled monitor runs consume credits too. Check your plan’s monthly credit allotment and upgrade if you need more frequent or more extensive runs.

Q: Can I integrate Browse AI with Google Sheets or Slack?

A: Yes. Browse AI integrates with Zapier and Make, which lets you send data to Google Sheets, Slack, Airtable, CRMs, and more. You can also directly call the Browse AI API from your backend to fetch extracted results.

Q: Is Browse AI suitable for teams?

A: Absolutely. The professional and enterprise plans support multiple users, more domains, higher credit limits, and enhanced platform access. It’s well-suited for teams that run recurring extractions and need stable pipelines for analytics or reporting.

Q: What if a website updates its layout and my robot breaks?

A: You can edit your robot manually and re-select the new elements. The recorder interface lets you update selectors. For larger changes, you might rebuild the robot or create multiple robots to handle layout variants. Adding fallback selectors reduces downtime.

Advanced Tips & Workflow Ideas

Here are some higher-level techniques I use once I’m comfortable with the basics:

1. Build a normalization microservice

When collecting data from many sources, you’ll inevitably get inconsistent formats. I often create a small backend service that accepts raw Browse AI extractions, normalizes numbers, dates, and categories, and writes clean rows to a data warehouse or analytics table.
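The core of such a service can be a few pure functions that you wrap in whatever web framework you already use. This is a sketch under my own assumptions: the date formats, the category aliases, and the field names are hypothetical, and `normalize_number` assumes US-style separators. Raw values are kept alongside the cleaned ones for debugging and audits.

```python
from __future__ import annotations

import re
from datetime import datetime

# Hypothetical formats and aliases; extend them for the sources you scrape.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%b %d, %Y", "%d %B %Y")
CATEGORY_ALIASES = {"copywriting": "Writing", "writing": "Writing", "image gen": "Image Generation"}


def normalize_date(text: str) -> str | None:
    """Try each known format and return an ISO date string, or None if none match."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def normalize_number(text: str) -> float | None:
    """Extract the first number, dropping thousands commas ('1,250 sq. ft.' -> 1250.0)."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    return float(match.group().replace(",", "")) if match else None


def normalize_row(raw: dict[str, str]) -> dict:
    """Turn one raw extraction into a clean row, keeping the raw values for audits."""
    category = raw.get("category", "").strip()
    return {
        "date": normalize_date(raw.get("date", "")),
        "sqft": normalize_number(raw.get("sqft", "")),
        "category": CATEGORY_ALIASES.get(category.lower(), category),
        "raw": raw,
    }
```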

2. Enrich data automatically

Use Make or Zapier to enrich scraped results. Common enrichment steps:

- Resolve domain to company metadata (e.g., using Clearbit).

- Verify emails or phone numbers with a third-party verifier.

- Call a geocoding API to convert addresses to lat/long for mapping.

3. Create dashboards for historical trend analysis

Export normalized data to a BI tool (Looker Studio, formerly Google Data Studio, or Tableau) to create trend charts such as price change history, number of new listings by week, or sentiment over time. The insights often justify the scraping effort.

4. Use screenshots for human-in-the-loop verification

When data needs human validation, configure the robot to take full-page screenshots. You can then set up a workflow to push the screenshot and the extracted fields to a review board (Slack channel or Airtable), where a team member inspects and approves or corrects entries.

When to Choose Browse AI vs. Building a Custom Scraper

Browse AI is excellent when speed and low setup time matter. Choose Browse AI if:

- You want to deploy quickly without developing and maintaining scraping scripts.

- You need monitoring and integrations built-in for non-developers.

- You need multi-domain coverage with low administrative overhead.

Consider a custom scraper if:

- You require extreme scale and need to manage proxies, distributed crawling, and IP rotation in-house.

- You have legal or compliance restrictions requiring on-premise scraping solutions.

- You need specialized parsing logic that’s computationally heavy or relies on complex AI models that must integrate directly with your backend.

Final Recommendations

If you’re getting started with data extraction and automation, I recommend the following approach:

- Sign up for Browse AI and try a pre-built robot for a site you care about (e.g., an e-commerce product page, a directory, or a job board).

- Run the robot once manually to validate the fields. Edit selectors if necessary.

- Set up a simple Zapier or Make flow that writes results to Google Sheets or Airtable. This gives immediate utility while you iterate.

- Enable monitoring on high-value pages and set reasonable intervals.

- Monitor credit consumption for a week to decide which pricing tier fits your usage.

For teams, I’d recommend starting with the pro plan (or equivalent) — it balances cost, allowed domains, and credits. As your automation needs grow, upgrade to the professional or premium tiers for larger credit pools and domain allowances.

Summary

Browse AI makes web scraping accessible without code. It’s built for rapid setup, offers a library of pre-built robots for common tasks, and includes monitoring and integrations to convert raw extractions into usable business workflows. Whether you’re pulling competitor prices, collecting leads, or monitoring real estate listings, Browse AI accelerates the process and plugs cleanly into automation tools like Zapier and Make.

I used Browse AI to extract details from an AI directory as a live example, but the same workflow applies across a broad range of sites. With careful selector design, sensible monitoring frequency, and respectful use of site terms, Browse AI becomes a reliable part of your data stack.

FAQ (Extended)

Q: How do I handle pagination or multi-page scraping with Browse AI?

A: You can configure robots to follow “next” links and iterate through paginated lists. The recorder supports actions that click buttons and navigate to the next page. Set a loop limit to prevent infinite crawling. Design your logic to capture items on each page and add them to a single result set.

Q: Does Browse AI provide IP rotation or proxy management?

A: Browse AI abstracts away many scraping headaches, but if you require custom proxy rotation or specific IP management, you should consult Browse AI’s docs or contact support. For typical usage, their platform handles request routing. For high-volume or compliance-sensitive scraping, consider enterprise options or custom proxies.

Q: Can Browse AI scrape sites that use infinite scroll?

A: Yes. The recorder can simulate scrolling actions to load additional content and capture elements that appear as you scroll. You may need to add wait steps and ensure the robot scrolls slowly enough to trigger the lazy-loading behavior.

Q: What file formats can I export to?

A: You can export results as CSV or JSON, or integrate directly with Google Sheets, Airtable, or databases via Zapier/Make. The API also returns JSON payloads for programmatic consumption.
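If you pull JSON through the API and want a CSV file without going through Zapier or Make, a few lines of standard-library Python will do it. This sketch assumes you’ve already flattened the captured fields into a list of dicts; the API response likely wraps them in a larger structure, so check the docs for the exact shape.

```python
from __future__ import annotations

import csv
import io


def rows_to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize extracted rows to CSV text, using the union of all keys as columns."""
    columns: list[str] = []
    for row in rows:
        for key in row:
            if key not in columns:
                columns.append(key)  # keep first-seen column order
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)  # missing keys are written as empty cells
    return buffer.getvalue()


print(rows_to_csv([{"name": "Tool A", "price": "10"}, {"name": "Tool B", "rating": "4.5"}]))
```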

Q: How do I maintain long-term reliability of robots?

A: Periodically review robot results and set up alerting for extraction failures. Keep a short list of your most fragile robots and check them regularly for layout drift. Where possible, prefer structured endpoints (official APIs) from target sites, and use robots for data that isn’t available through an official API.

Closing Thoughts

Browse AI is a practical, no-code solution that helps individuals and teams collect and monitor web data quickly. Use it to prototype ideas, run market research, automate lead generation, and power analytics dashboards. If you combine careful robot design, thoughtful monitoring cadence, and respectful use of target site policies, it becomes a powerful addition to your automation toolkit.

If you’d like, I can walk through a specific use case for your business — pick a target website and I’ll outline how I’d set up robots, monitoring, and downstream automations step by step.